With the new year in full swing, I thought it would be a good time to revisit some core concepts for good measure. Backing up Cisco device configurations is easy if you have software that does it for you, but what if you don’t? We don’t, and trying to convince the people in finance that we should buy software to do something that doesn’t directly affect the clients is nearly impossible. So there I was, in search of something that could automate the process. For free.

MRAT (Multi-Router Automation Tool, if I remember right) has long been dead, but its uses live on. If you have ever used mrat.pl, you know that it does exactly what it says it can do, and it’s up to you to make it fancy. I’m going to run through how I decided to do it.

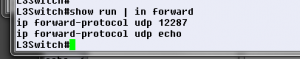

First, let’s have a look at the command line switches for mrat:

-r <routersfile>

-c <commandfile>

-o <outputlog>

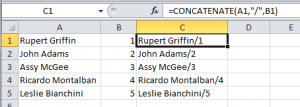

The output log is optional, but it can come in handy if you’re looking to obtain information about your devices. That leaves us with the routers file and the command file. The routers file (which doesn’t technically have to contain routers) is a colon-separated list of values, including optional special variables of your choosing. You can scrape together a list fairly quickly with copy and find/replace if you have a flat list of IP addresses for your devices. Here’s an example line from my list:

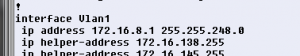

172.16.24.32:confusername::confpassword:172.16.1.2:172.16.24.32

This gives mrat the IP, username, password, and two additional variables. In this case they are the TFTP server IP and the filename, which I need because I’m backing up configs to TFTP. I use the IP rather than the hostname as the filename here because it makes the routers file easier to create. I will take care of finding hostnames later.
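If you are starting from a flat list of IPs, something like the following sketch could build the routers file for you. It simply copies the field layout of the example line above (including the empty third field); the function name `make_swlist`, the input file `iplist.txt`, and the credentials are placeholders of mine, not anything mrat provides.

```shell
#!/bin/bash
# Sketch: turn a flat list of management IPs into routers-file lines.
# Field layout is copied from the example line in this post; credentials
# and the TFTP server IP are placeholders passed in as arguments.

make_swlist() {
    # $1 = username, $2 = password, $3 = TFTP server IP; IPs arrive on stdin
    awk -v user="$1" -v pass="$2" -v tftp="$3" '
        NF && $1 !~ /^#/ {    # skip blank lines and comment lines
            printf "%s:%s::%s:%s:%s\n", $1, user, pass, tftp, $1
        }'
}

# Example (hypothetical file names):
# make_swlist confusername confpassword 172.16.1.2 < iplist.txt > swlist
```

This assumes every device shares the same credentials and that the password contains no colons or backslashes, since either would confuse the field layout or awk’s variable handling.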

Now that I’ve got my switch/router list ready to go, let’s have a look at the command file. You can use any commands you want (I’ve used command files to gather information about IOS versions, router inventories, etc.), but for backing up we only need a few simple lines. A command file example for config backup would look something like this:

copy run tftp://1.2.3.4/backupdirectory/filename

<carriage return>

<carriage return>

<carriage return>

exit

Notice the blank lines between the copy command and exit (I typed <carriage return> to make them visible). Each one sends a bare Enter to the router, which accepts the defaults when the copy command asks you to confirm the remote host and the destination filename; any extras are just empty lines at the prompt. This gives you an easy way to back up a router config to a TFTP server, but there’s a problem: the filename is static (and so is the TFTP server IP). We can fix that. I put together a little bash script to streamline the process, and it goes like this:

#!/bin/bash

########################################

# Enhanced mrat Backup Script         #

# Author: Jim                   #

########################################

cmdbudir=cfg-`date +%Y-%m-%d-%H%M`

budir=/tftpboot/$cmdbudir

tmp=backuptemp

########################################

mkdir $budir

chown nobody:nobody $budir

mkdir $tmp

########################################

echo copy run tftp://!var1/$cmdbudir/!var2 > $tmp/cmd

echo >> $tmp/cmd

echo >> $tmp/cmd

echo >> $tmp/cmd

echo >> $tmp/cmd

echo exit >> $tmp/cmd

########################################

echo -n mrat beginning...

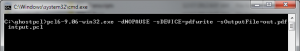

./mrat.pl -r swlist -c $tmp/cmd

echo done.

########################################

echo -n Renaming files...

for f in "$budir"/*

do [ -f "$f" ] || continue

name=$(grep -m1 '^hostname ' "$f" | awk '{print $2}')

[ -n "$name" ] && mv "$f" "$budir/$name"

done

echo done.

########################################

echo -n Cleaning up...

rm -rf $tmp

echo done.

This script is fairly straightforward. The first section sets three variables: the date-stamped backup directory name, its full path under /tftpboot, and the name of a temporary working directory. The next section creates both directories (the chown lets a TFTP daemon running as nobody write into the backup directory).

Next, we create a custom command file to be used with mrat (which is run in the next section). This command file includes the “!var1” and “!var2” variables in order to leverage the flexibility of mrat. I have used var1 in this case as the TFTP server IP, and var2 as the filename.
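Before pointing the script at real devices, it can help to preview what each one would be told to do. The sketch below only imitates the substitution; it assumes !var1 and !var2 are taken from the 5th and 6th colon-separated fields, as in my example routers-file line, and `preview_cmds` is a name I made up for illustration.

```shell
#!/bin/bash
# Sketch: print the copy command each device would receive.
# Assumption: !var1 / !var2 map to fields 5 and 6 of the routers file,
# matching the example line in this post. This imitates mrat, it does
# not call it.

preview_cmds() {
    # $1 = routers file, $2 = command template (first line of the command file)
    awk -F: -v tmpl="$2" '
        NF >= 6 {
            cmd = tmpl
            gsub(/!var1/, $5, cmd)    # TFTP server IP
            gsub(/!var2/, $6, cmd)    # destination filename
            print $1 " -> " cmd
        }' "$1"
}

# Example:
# preview_cmds swlist 'copy run tftp://!var1/cfg-test/!var2'
```

A line with fewer than six fields is silently skipped, so a device missing from the preview is a hint that its routers-file entry is malformed.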

Section 4 is where mrat is actually being run with the routerlist (in my case it’s called “swlist”) and our custom command file. For the sake of clarity, I have added another section to loop through the files that have been backed up while grepping out the hostnames and renaming the files. This keeps us from having to sort through config backups by IP and instead lets us use hostnames. This is just preference, but I see a list of hostnames as an easier alternative to a list of IP addresses. If you have a proper addressing scheme for your management IPs, either way could potentially work fine.

Finally, the last section of the script is just cleanup. It deletes the temporary directory that was created in the second section.

When this script is finished running, you’ll have a date- and time-stamped directory that contains all of the config backups, with hostnames as filenames. You can take it a step further and gzip the configs right from the script, but since we have a relatively small shop I usually do that by hand. You could also cron this script and automate the process even further.
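If you would rather not gzip by hand, a step along these lines could be added to the end of the script. This is a minimal sketch: `compress_backups` is a hypothetical helper, and the crontab line in the comments uses a placeholder path, script name, and schedule.

```shell
#!/bin/bash
# Sketch: compress every config in a finished backup directory.
# Pass in whatever $budir was for that run.

compress_backups() {
    local dir=$1 f
    for f in "$dir"/*; do
        # skip anything that is not a regular file or is already compressed
        [ -f "$f" ] || continue
        case $f in *.gz) continue ;; esac
        gzip -9 "$f"
    done
}

# Hypothetical crontab entry (path, script name, and schedule are placeholders):
# 30 2 * * * cd /opt/mrat && ./backup.sh >> backup.log 2>&1
```

The `cd` in the cron line matters because the script refers to ./mrat.pl, swlist, and the temp directory by relative path.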

There are a few catches to this method:

1) If you don’t maintain the switch/router file, your backups will be incomplete. I got myself in the habit of adding a new line to the swlist file every time a new device was added.

2) Make sure your devices are up and running before the backup runs. A non-responsive device will stall the script and cause mild headaches. I take a quick peek at the WhatsUp Gold network infrastructure section on my Home Workspace before I run this script to ensure that all of my devices are currently responding. If you administer a network of any size, you should be monitoring your devices for down situations anyway.

EDIT: The newest version of MRAT (0.63) fixes the non-responsive hanging issue! The newest version is available at http://www.serreyn.com/software/mrat/
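If you are stuck on an older version that still hangs, one workaround is to filter the routers file down to devices that answer a ping before handing it to mrat. The following is only a sketch: `filter_reachable` and the `PING_CMD` override are names I invented, and the default ping flags are the Linux iputils ones, so adjust them for other systems.

```shell
#!/bin/bash
# Sketch: keep only routers-file lines whose device answers one ping.
# PING_CMD is a hypothetical override hook; the default uses Linux
# iputils flags (-c 1 = one packet, -W 2 = two-second timeout).

PING_CMD=${PING_CMD:-"ping -c 1 -W 2"}

filter_reachable() {
    # $1 = full routers file, $2 = output file with responsive devices only
    local line ip
    : > "$2"
    while IFS= read -r line; do
        ip=${line%%:*}                 # first colon-separated field
        [ -n "$ip" ] || continue
        if $PING_CMD "$ip" < /dev/null > /dev/null 2>&1; then
            printf '%s\n' "$line" >> "$2"
        else
            echo "skipping $ip (no ping reply)" >&2
        fi
    done < "$1"
}

# Example:
# filter_reachable swlist swlist.up && ./mrat.pl -r swlist.up -c backuptemp/cmd
```

A device that drops ICMP but accepts logins would be skipped by this check, so treat the skipped list as something to review rather than as proof the device is down.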
